This post will mostly just restate what I said here, so you can skip it if you read that post. But with absurd AI talk running wild - right now is one of the least responsible moments in contemporary media history that I can remember, which is really saying something - I’m moved again to make a basic point about large language models like ChatGPT.

Decades ago, a computer scientist named Terry Winograd pointed out that there’s no such thing as a system that can truly master language without a theory of the world. That is to say, semantics, the science of meaning, cannot be shorn from the world that produces meaning: to understand and speak language effectively, you must understand words, and to understand words, you must compare them to a universe apprehended by something like a conscious mind. You can see this in the relationship between coindexing, the process of linking pronouns to the nouns they refer to, and the parsing of natural language. A commenter on this newsletter proposed a good example.

The ball broke the table because it was made of concrete.

The ball broke the table because it was made of cardboard.

These two sentences are grammatically identical and differ only in the material specified. And yet 99 out of 100 human beings will say that in the first sentence “it” refers to the ball, while in the second “it” refers to the table. Why? Because concrete tables don’t break when you drop balls on them, and balls don’t break tables if they (the balls) are made of cardboard. In other words, we can coindex these pronouns because we have a theory of the world - a sense of how the universe functions that informs our linguistic parsing.

And this is a key difference between human intelligence and a large language model. ChatGPT might get the coindexing right for any given set of sentences, depending on which response its model finds more probable. But it won’t do so consistently, and even when it does, it isn’t doing so because it has a mechanistic, cause-and-effect model of the world the way that you and I do. Instead, it uses vast data sets and complex models to generate statistical associations between terms and produce a probabilistic response to a prompt. It is driven by the distributional hypothesis, the notion that a word’s meaning can be derived from its proximal relationships with other words in use, and it exploits that hypothesis with unfathomably large training sets, enormous numbers of parameters, and guardrails of immense complexity. But at its root, like all large language models, ChatGPT is making educated inferences about what a correct answer might be based on its training data. It isn’t looking at how the world works and coming to a conclusion. Indeed, there is no place within ChatGPT where that “looking” could occur or where a conclusion would arise. It can’t know.
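To make “statistical association” concrete, here is a deliberately crude sketch in Python. It is not how ChatGPT works internally (a real LLM is a neural network trained on vastly more text); it only illustrates choosing a referent from word statistics rather than from physics. The tiny corpus, the sentence-level co-occurrence count, and the function names `cooccurrence_counts` and `resolve_it` are all hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus; real models are trained on vastly more text.
CORPUS = [
    "the concrete ball rolled across the yard",
    "a concrete ball is heavy",
    "the cardboard table collapsed",
    "she built a cardboard table for the party",
    "the wooden table held the lamp",
]

def cooccurrence_counts(sentences):
    """Count how often each unordered pair of distinct words shares a sentence."""
    counts = Counter()
    for sentence in sentences:
        words = sorted(set(sentence.split()))
        for a, b in combinations(words, 2):  # pairs come out alphabetically ordered
            counts[(a, b)] += 1
    return counts

def resolve_it(candidates, material, counts):
    """Return the candidate noun that co-occurs most often with the material word.

    No physics is consulted: the choice rests entirely on word statistics.
    Ties go to the first candidate listed.
    """
    def score(noun):
        return counts[tuple(sorted((noun, material)))]
    return max(candidates, key=score)

counts = cooccurrence_counts(CORPUS)
print(resolve_it(["ball", "table"], "concrete", counts))   # prints: ball
print(resolve_it(["ball", "table"], "cardboard", counts))  # prints: table
```

The resolver gets both sentences right here only because of what the toy corpus happens to contain. Add enough sentences about cardboard balls and it will confidently pick the ball in the second sentence, which is the inconsistency described above.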

In grad school I spent a couple of months looking at primitive latent semantic analysis models, trying to understand them with my meager abilities. The models I was learning about were orders of magnitude less complex, and less functional, than ChatGPT. But at their root, they did the same thing as these LLMs: look at text, note the distribution of tokens in that text, and come up with likely strings of tokens based on that distribution.
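Latent semantic analysis itself works by factoring a term-document count matrix, which is more machinery than a short example needs. The sketch below swaps in a simpler technique, a bigram model, because it maps directly onto the three steps just listed: read text, count which token follows which, then emit likely strings. It is an illustration under those assumptions, not a reconstruction of the models mentioned above; the corpus and the helper names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each token, which tokens follow it and how often."""
    tokens = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, length):
    """Greedily extend `start` with the most frequent follower of the last token.

    Counter.most_common breaks ties by first-seen order.
    """
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:
            break  # the last token was never followed by anything in training
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical toy corpus.
corpus = "peanut butter and jelly on bread . peanut butter on celery . jelly on bread"
model = train_bigrams(corpus)
print(generate(model, "peanut", 5))  # prints: peanut butter and jelly on
```

The output reads like a phrase about food, yet the program contains nothing but counts of adjacent tokens. Ties go to whichever follower was seen first, which is why `butter` is followed by `and` rather than `on`.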

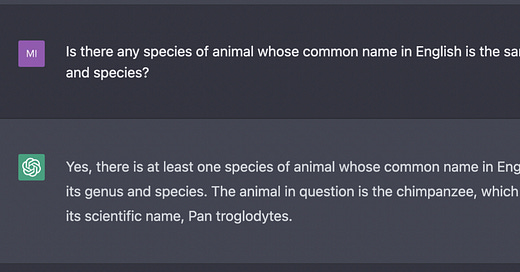

In the example pictured above, ChatGPT produces a nonsensical response because it generates its answer based on how probable that answer appears to its engine. And it doesn’t notice that its response is nonsensical because there is no “noticer” within ChatGPT. There is no consciousness that can notice anything at all, including that an answer completely violates the basic demands of a given question. ChatGPT presumably has error-checking functions, but those functions are likely (a) more applications of distributional semantics and (b) guardrails programmed in deliberately to avoid wrong (or, more likely, offensive) answers, which don’t respond to emergent conditions in the way that makes these systems impressive. ChatGPT has no concept of error beyond the distribution of the word “error” relative to other words in its data sets, and the probabilistic model it builds of the proximal relationships between “error” and those words. And this, simply, is not intelligence, much less consciousness.

Sometimes people who really, really want general AI to be here will suggest that behind human thinking there’s just a probabilistic engine like ChatGPT. But there’s no evidence for this, it defies the lived experience of how we think, and people like David Chalmers, Daniel Dennett, Noam Chomsky, and Douglas Hofstadter have presented persuasive evidence against it. When someone says that ChatGPT “knows” what peanut butter is, that’s fine as long as it’s understood that this knowing is a matter of observing the proximal relationship of “peanut butter” to “jelly,” “bread,” “celery,” and so on. It is not knowing in the sense of possessing an understanding of the universe as a mechanistic system where things have meaning-bearing relationships to each other that do not stem in any way from their expression in the distribution of tokens in natural language.

ChatGPT can infer from probabilistic data that, for the purposes of producing text, peanut butter is something you eat, but it does not, and cannot, know what peanut butter or eating is.
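The kind of “knowing” described here can be sketched with context vectors: represent each word by counts of the words that appear within a small window around it, and call two words related when their vectors point in a similar direction (cosine similarity). The two-word window, the four-sentence corpus, and treating `peanut_butter` as a single token are all hypothetical simplifications, not a description of ChatGPT’s internals.

```python
import math
from collections import Counter, defaultdict

def context_vectors(sentences, window=2):
    """Map each word to a Counter of words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(u[k] * v[k] for k in u)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

# Hypothetical toy corpus; "peanut_butter" is treated as one token.
corpus = [
    "spread peanut_butter on bread",
    "spread jelly on bread",
    "dip celery in peanut_butter",
    "bolt the table to the floor",
]
vecs = context_vectors(corpus)
for word in ["jelly", "celery", "table"]:
    print(word, round(cosine(vecs["peanut_butter"], vecs[word]), 2))
# Expected output:
# jelly 0.77
# celery 0.26
# table 0.0
```

`peanut_butter` ends up closest to `jelly` because both show up in “spread … on bread” contexts, and nothing in the code represents food or eating. Note that `celery`, which appears right next to `peanut_butter`, scores lower than `jelly`, which never does: this measure rewards shared contexts, not adjacency.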

Of course, all of this is perfectly fine if you just want a program that can produce impressive text-based responses that usually mimic sensible, human-produced language effectively. There are a lot of potential applications for such software. I personally think the consequences will be a lot smaller than many people are saying, but I can see how such programs could disrupt some industries in ways both good and bad. But of course, our media doesn’t want to tell you “ChatGPT is an interesting and at times useful tool for producing plausibly human responses to text prompts.” Our media wants to say “ChatGPT is artificial intelligence, ChatGPT is general intelligence, ChatGPT is sentient, the world is about to end, the Rapture is here, nothing will be the same.” No, sorry, the media doesn’t want to say that. It must say that. Every bad incentive and toxic dynamic in media contributes to the current story. A measured and careful story about large language models will never go viral. If he hadn’t been playing Cassandra for decades, no one would have heard of Eliezer Yudkowsky. All of this absurd, serial overestimation of how much the world is going to change because of incredibly sophisticated autocomplete is driven by self-interest and greed.

People want attention; this is a way to get attention; they’re going to use this way to get attention until the moment fades and they move on to something else. And that’s why I’m going to go live in a cabin above the Arctic Circle for six months.

A stunning number of commenters here refuse to grapple with what these engines actually do. So desperate to believe in the Rapture.

"Maybe we also collect immense data sets and have guardrails and parameters installed by god-knows-who, and this is how we reason." Uh... maybe? Like, maybe God exists? We have absolutely no evidence for that and good evidence against it. For example, very young babies have a theory of the world: they react to things that break fundamental principles of basic physical mechanics. How could they possibly have assembled a data set large enough to do that in so short a time?

All of the consciousness that we know exists in the universe - all of it - is embodied in wetware. It's stunning to see so many Cartesian mind/body dualists here who think that there is such a thing as a mind that exists independently of the brain.

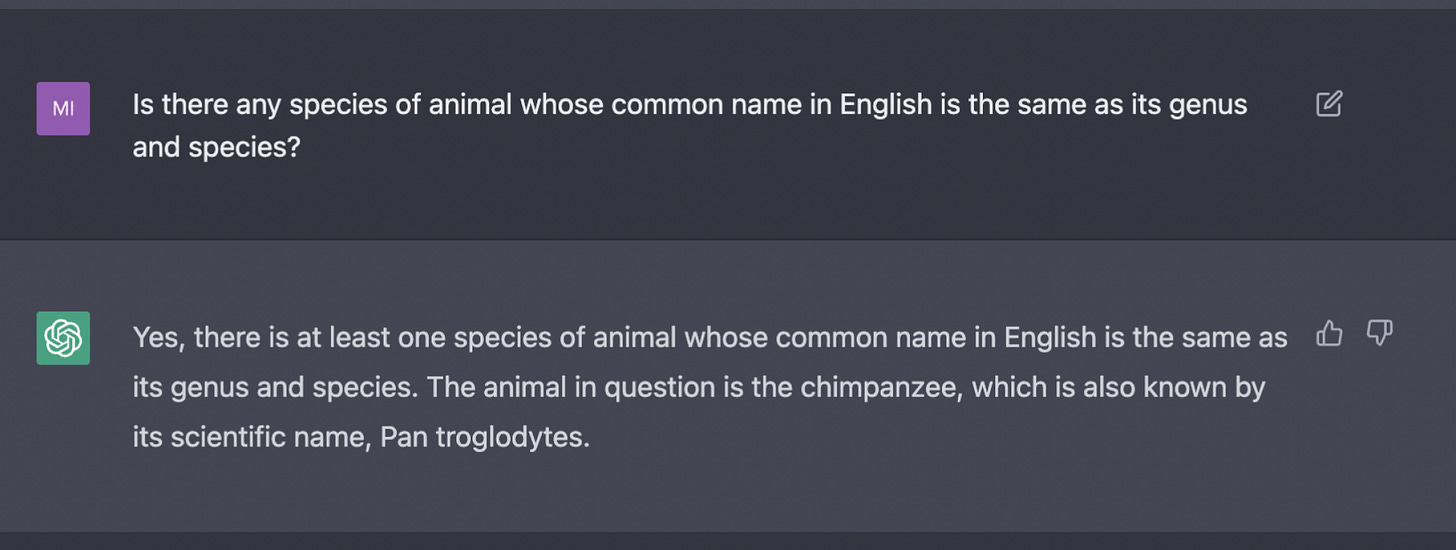

You used GPT-3.5. I tried it on GPT-4:

Is there any species of animal whose common name in English is the same as its genus and species?

Yes, there is at least one example of an animal whose common name in English is the same as its genus and species. This animal is Gorilla gorilla, the western gorilla. The western gorilla is a great ape native to the forests of central Africa. Its common name, gorilla, matches both its genus (Gorilla) and species (gorilla) names in scientific classification.