Let Me Repeat Myself: The SAT's Predictive Power for College Grades is Systematically Underestimated Because of Range Restriction

Eric Levitz in New York:

This doesn’t mean that it would be good to base admissions on the SAT alone. The test’s biggest problem is that it doesn’t predict success in college very well. But as an equity matter, the SAT is one of the least-bad metrics we’ve got.

So I think it’s really important that we get this straight, as this sort of thing has somehow gone uncorrected in the media for decades: this is just wrong. It’s an understandable mistake given how information on this topic gets disseminated, but it’s just wrong. The SAT’s seemingly low correlations with college GPA are a product of systematic range restriction. When adjusting for that range restriction, we find that the SAT-college GPA correlation is robust. (Same with the ACT.) This is particularly true given the modest correlations found in all kinds of human research, which is inherently noisy. What’s intensely frustrating about this is that we’ve known about this issue forever and yet nobody updates their understanding of the test. I wrote this piece about this issue in 2017! Range restriction is a problem we know all about, and fixing it is something we do very well. The SAT is a strong predictor of college success, period. Even in grad school, where grades are notoriously inflated, entrance exams are strong predictors of success. I find the willful ignorance about this stuff so frustrating.
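For anyone who wants to see what "adjusting for range restriction" looks like in practice, here is a minimal sketch of one standard textbook correction, usually called Thorndike's Case II. It assumes students were selected directly on the test score and that the test-grades relationship is linear with roughly constant scatter; real admissions are messier than that, so treat it as an illustration rather than the method any particular study used. The function name and the numbers (an observed correlation of 0.30, standard deviations of 200 and 100) are hypothetical and chosen only to show the arithmetic.

```python
import math


def correct_for_range_restriction(r_restricted, sd_unrestricted, sd_restricted):
    """Thorndike Case II correction for direct range restriction on the predictor.

    r_restricted:    correlation observed in the selected (enrolled) sample
    sd_unrestricted: predictor standard deviation among all test-takers
    sd_restricted:   predictor standard deviation among enrolled students
    """
    u = sd_unrestricted / sd_restricted  # how much wider the full population is
    r2 = r_restricted ** 2
    return (r_restricted * u) / math.sqrt(1 - r2 + r2 * u ** 2)


# Hypothetical inputs, not taken from any real dataset.
observed_r = 0.30
corrected_r = correct_for_range_restriction(
    observed_r, sd_unrestricted=200, sd_restricted=100
)
print(f"observed r = {observed_r:.2f}, corrected r = {corrected_r:.2f}")
```

Note that when the two standard deviations are equal the function returns the observed correlation unchanged, which is the sanity check you'd want: no restriction, no correction.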

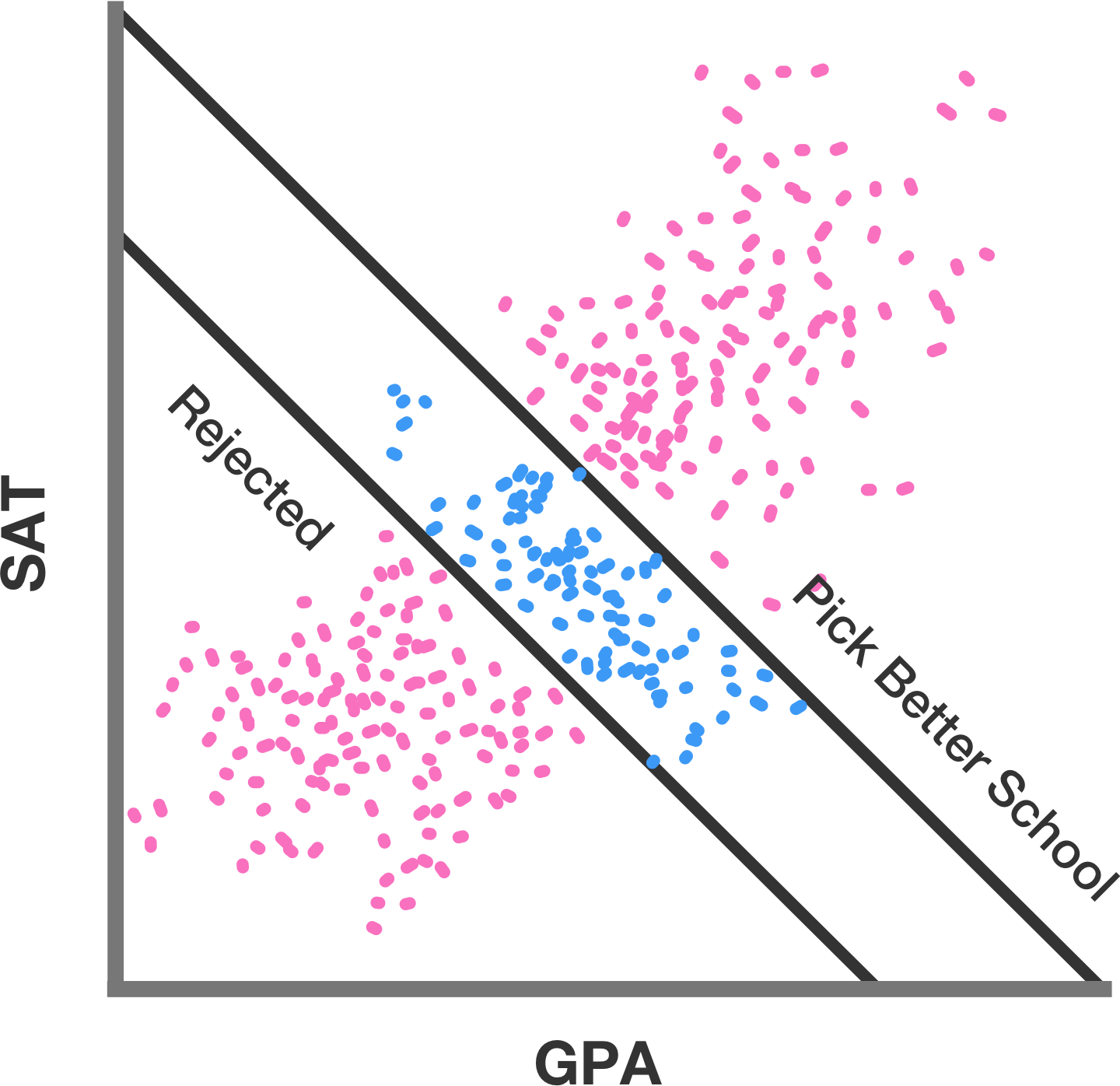

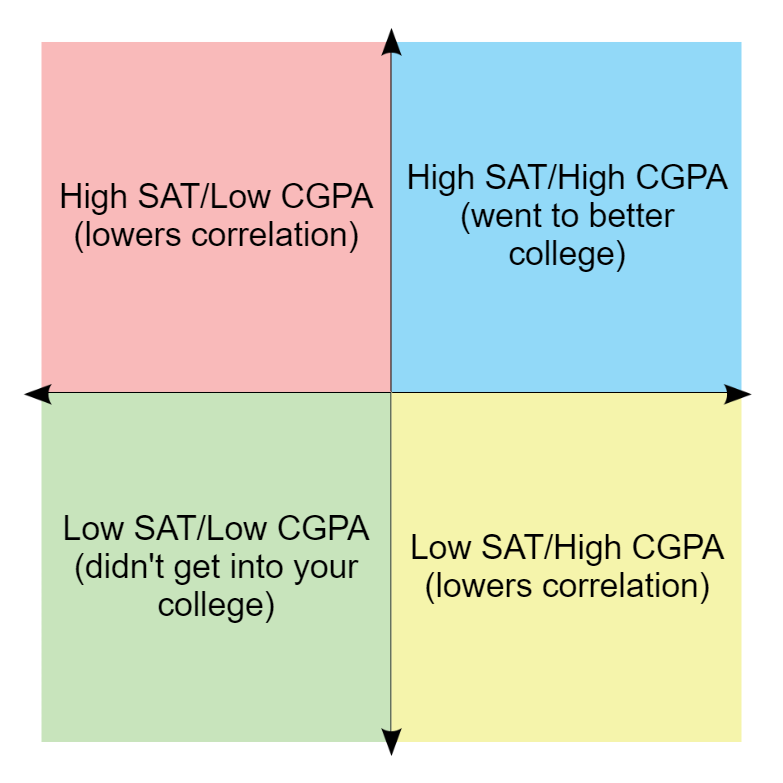

Why do raw reported SAT-college GPA correlations look low? The fundamental problem is that correlations gathered at any given college can only include students who go to that college. Students who went to better or worse colleges aren’t in your data, and students who didn’t go to college at all aren’t in anyone’s data. This is absolutely basic range restriction stuff. Here’s a crude graphic representation of the possibilities for SAT scores and college GPA.

Think about it for a minute. Pearson correlations compare continuous variables to other continuous variables, but for the sake of conceptual ease, think in terms of these quadrants. Data points where a student has a high SAT score and gets good college grades make the correlation higher, as do data points where a student has a bad SAT score and gets bad college grades. Data points where a student has a high SAT score and gets bad college grades make the correlation lower, as do data points where a student has a bad SAT score and gets good college grades. So what’s the problem? The problem is that the kinds of students who have a bad SAT score and will get bad college grades didn’t get into your college in the first place! An entire quadrant of data that would help the correlation is thus excluded. And, at some schools, the opposite is happening - students who have high SAT scores and the ability and temperament to get good college grades went to a better school and thus aren’t in your sample. Since most kids are average, many college students will have a mixture of strengths, some better at tests like the SAT but worse at grades, some the opposite. Which means that most colleges are going to have more of the kids who are stronger in one or the other. But this tells us nothing about how strong the actual correlation is. (Berkson’s paradox, folks!) Meanwhile at the most elite schools, where the average admittee has something like a 1550, there are ceiling effects.
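If the quadrant argument feels too hand-wavy, a toy simulation makes the same point. This is a sketch under loudly simplified assumptions: SAT scores and college grades are standardized, the true correlation across all test-takers is set to 0.5 (a made-up value, not an estimate), and a single hypothetical campus enrolls only students whose scores fall in a one-standard-deviation band, because weaker scorers weren't admitted and stronger scorers went elsewhere. The function names and parameters are mine, purely for illustration.

```python
import math
import random


def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate(true_r=0.5, n=100_000, low=0.0, high=1.0, seed=0):
    """Return (correlation among everyone, correlation on one campus)."""
    rng = random.Random(seed)
    sat, gpa = [], []
    for _ in range(n):
        s = rng.gauss(0, 1)  # standardized SAT score
        noise = rng.gauss(0, 1)  # everything else that drives grades
        g = true_r * s + math.sqrt(1 - true_r ** 2) * noise
        sat.append(s)
        gpa.append(g)
    # The campus only ever sees students inside its score band.
    enrolled = [(s, g) for s, g in zip(sat, gpa) if low <= s <= high]
    campus_sat = [s for s, _ in enrolled]
    campus_gpa = [g for _, g in enrolled]
    return pearson(sat, gpa), pearson(campus_sat, campus_gpa)


full_r, campus_r = simulate()
print(f"all test-takers: r = {full_r:.2f}")
print(f"one campus only: r = {campus_r:.2f}")
```

Under these assumptions the campus-only correlation comes out well below the population value, even though nothing about the underlying relationship between scores and grades has changed. Widening the band (say `low=-3.0, high=3.0`) pushes the campus number back toward the full-population figure.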

For the record, the study Levitz links to in defense of the idea that entrance exams don’t predict college success is awful. I wrote this about it in 2021:

There is such a movement to deny the predictive validity of these tests that researchers at eminently-respected institutions now appear to be contriving elaborate statistical justifications for denying that validity. Last year the University of Chicago’s Elaine Allensworth and Kallie Clark published a paper, to great media fanfare, that was represented as proving that ACT scores provide no useful predictive information about college performance. But as pseudonymous researcher Dynomight shows, this result was a mirage. The paper’s authors purported to be measuring the predictive validity of the ACT and then went through a variety of dubious statistical techniques that seem to have been performed only to… reduce the demonstrated predictive validity of the ACT. As someone on Reddit put it, the paper essentially showed that if you condition for ACT scores, ACT scores aren’t predictive. Well, yeah.

Check the Dynomight link, please. This basic statistical problem with that Allensworth and Clark paper has been well-discussed by people in educational assessment since it came out. It’s not a secret or hard to understand what they got up to. Why does that not get reported? Why does the restriction of range problem never get corrected in the media? Because they don’t want it to be corrected, for all the reasons I’ve laid out for years about media antipathy to entrance exams. They’ve convinced themselves that getting rid of the entrance exam is One Weird Trick to ending racial inequality in colleges and they’ve harbored resentment towards the SATs ever since they sweated over the test in high school.

Finally, let me say this: I would never want the SAT to perfectly predict college grades. Why? Because there are many different types of human success and human flourishing. There are a lot of people who did shitty in college and who went on to all kinds of creative, intellectual, and political success. The beauty of the fact that high school GPA and SAT scores correlate strongly but imperfectly is that this means that they’re in broad agreement about who’s ready to go to college but also allow for wiggle room in what kinds of ability they’re selecting for. And what’s truly bizarre is that this all happened in a push for “holistic admissions,” meant to consider the whole individual - but without taking account of the whole picture of academic preparedness. It’s all bizarre and a classic example of the “better look busy” school of racial progress. Bring back the SAT.

To say it a bit differently: it is probably true that the NBA scoring records of 6'8" players and 6'10" players aren't much different, but no one infers from that that height doesn't matter in the NBA.

Removing the SAT would only exacerbate class-based educational inequality. The SAT can be brute-forced via lots of studying that is available for free on the internet these days. If it were removed and changed to “holistic” measures, then knowing the right extracurriculars to have, the right sports to play, the right things to say on a college essay, the right amount of money donated to the college, etc. would become far more important. Holistic admissions are a way for the failed children of the elite to maintain their power.