OK so this doesn’t count as a real post. I’m just a little intrigued here. In the Gospel of John (the weird gospel) Jesus washes the feet of his disciples, including Judas. This has been mined to great effect, particularly by those who suggest that in fact Judas was the greatest disciple because he was the one who was willing to do what it took to provoke the sacrifice that completed the second covenant. Anyway, I was playing with an AI image generator, trying to get an image of Jesus washing someone’s feet. I had always thought that he had washed the feet of lepers specifically or poor people generally, but I can only find biblical evidence of him washing the disciples’ feet. In any event, the passage from John has long been seen as particularly evocative, and the scene has correspondingly been a very common subject in the history of Christian art, as a simple Google search will show:

And yet I can’t for the life of me get an AI to generate such an image. It’s odd.

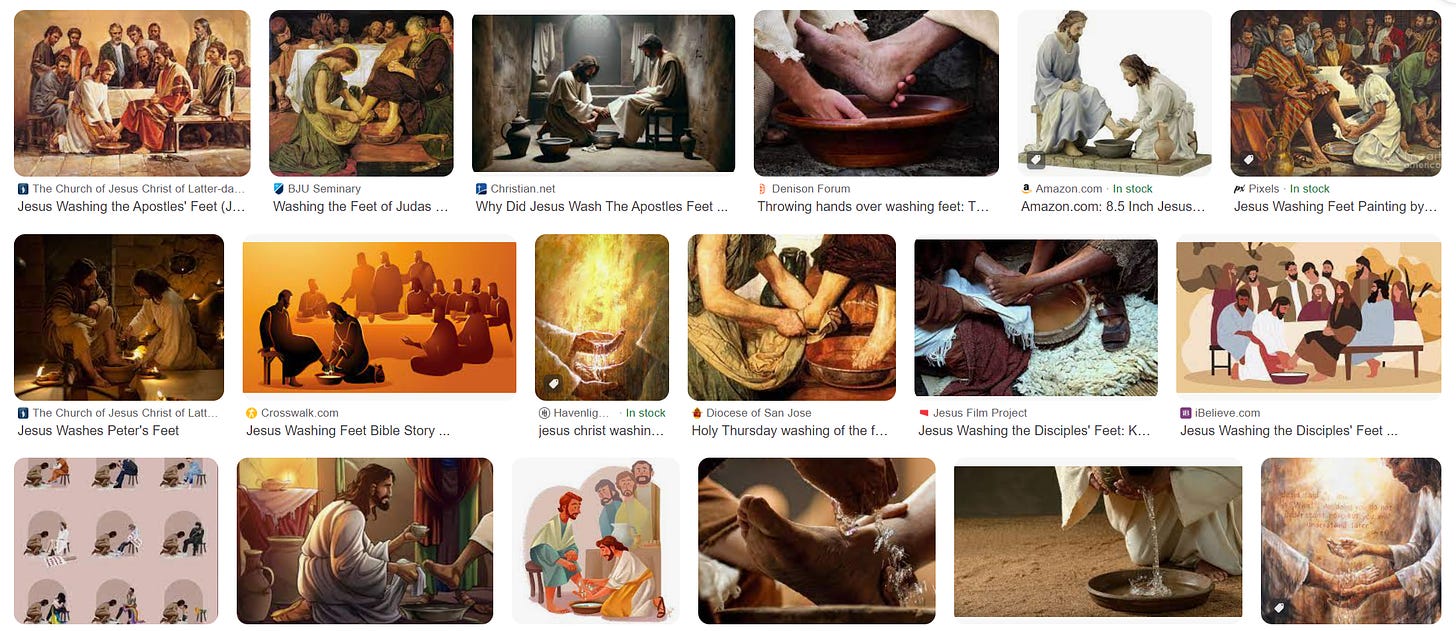

Here are results (with various prompt tweaks) taken from Bing’s image generator, which runs on Dall-E 3:

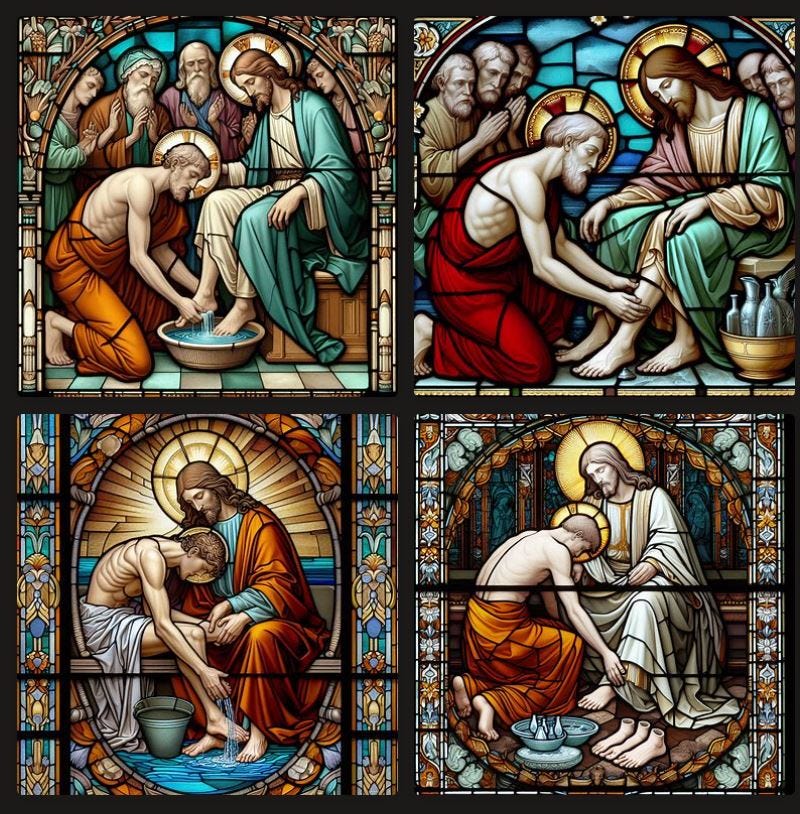

Here’s from Imagine.ART:

Stable Diffusion 2.1:

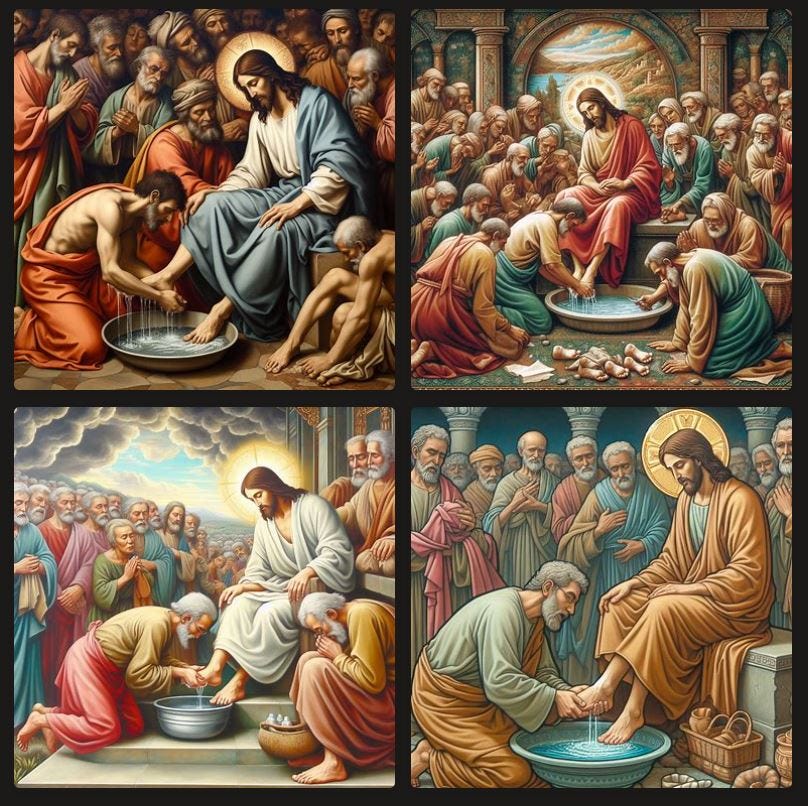

Here’s from Craiyon’s generator:

Here’s from Dall-E mini, an independent open-source model inspired by Dall-E rather than a version of it:

I don’t have Midjourney but I’m sure some of you do, so feel free to try it out on there and report back with your findings.

I tried dozens of variations on this request without success. I’m sure enough tinkering would result in what I was asking for, but my results suggest that there’s clearly a… preference not to produce the image in question. An awful lot of the images I generated had the reversal problem you see repeatedly here - Jesus is getting his feet washed, not washing feet. It’s true that Jesus gets his feet washed in the book of Luke, but the story where Jesus does the washing is much more prominent both in Christian mythology and in art. And anyway, if these generators are as revolutionary as their marketing copy suggests, they shouldn’t fall into subject-object issues like that. Other images are kind of unclear on the whole feet thing in general. (The one where someone has Jesus’s interchangeable feet laid out next to him is pretty fun.) I’m particularly surprised because there’s such a large historical artistic corpus for this prompt, which would suggest plenty of training data, at least to a layman. So… what gives?

If I were to anthropomorphize, which I shouldn’t, I might guess that they’re thinking “Jesus is a leader, so he’d be getting his feet washed by his followers, not the other way around.” Alternatively, I allllllmost wonder if this is a product of the kind of guardrails they’ve had to put on these tools so that they’re not offensive; maybe, somehow, their parameters suggest that it would be offensive to portray Jesus in a subservient position. Which is funny because, you know, the whole thing about Jesus was his disinterest in worldly concerns about status.

Then again, here’s Google Gemini responding to the prompt “Generate an image of a triangle”:

One for nine, and even the one hit has a lot of extra faff I didn’t ask for. So maybe I’m just overthinking it and asking too much.

I am willing to bet your query is running afoul of "don't generate images someone might find offensive" hacking.

Putting aside the PC possibility, the likely issue is that LLMs (on which the art programs are based) derive their data from associations between words in a sequence in the texts they scrape. They do not use logic and they have no grasp of cause and effect. A clue here is that, as you note, the story is in Luke, but the idea is in pictures (that LLMs don't scrape) and mythology, which may be characterized by their makers as low-authority sources, while the Bible is, well, THE BIBLE, on religion, so it's well scraped and there will be a powerful Jesus - wash association, with no indication of who washed whom. Its data indicate Jesus is important, and so the washing - Jesus association will be just that: "x washes Jesus." Please share your post with fellow Substacker Gary Marcus, who can explain more fully. He has discussed such failures before, but the one you have found is especially interesting due to its consistency across prompts and programs. It's a great example of AI failure.