No one ever told me what almond butter is. When I first heard the term, I didn’t look it up on Google or in a dictionary. I didn’t need to. My brain possesses a theory of the world, that is, an understanding of basic facts about the universe and how it operates and an apprehension of the mechanistic and consequential relationships between those facts. I know that almonds are a kind of legume and also fall under the category of food, and that peanuts are a kind of legume and food, and that peanuts get turned into peanut butter, and I extrapolated from those facts to assume that almond butter is a product like peanut butter but made from almonds. And I was right. The term “almond butter” slid into my theory of the world seamlessly and without effort. I’m in possession of a cognitive engine that has a theory of food, and of botany, and of economics, and of nutrition, and of physics, and they all fit together with processes I never had to learn.

Are those processes perfect? Of course not. I could have heard the term “shea butter,” made a similar intuitive leap, concluded that shea butter is a food like peanut butter, and been wrong. The point is not that my theory of the world produces perfect outcomes, but that it derives logical principles from observation and then arrives at rational conclusions based on those observations. It might be said to be probabilistic in the sense that I’m assuming that almond butter is very likely similar to peanut butter. But I’m not thinking probabilistically like a large language model by looking at a database of proximal relationships between terms and deriving a probabilistic response string that is not in any sense dependent on deterministic, logical deduction. I didn’t locate the words “almond” and “butter” and the colocation “almond butter” in an incomprehensibly vast training set and generate a likely textual response. I thought “hey, almond butter is probably a very similar thing to peanut butter.”

One of the most common critical responses to my last post on LLMs, and one of the most wrongheaded, was some version of “how do you know that we don’t think the same way?” In the weaker form of this, a noncommittal “well we don’t know that we don’t think the same way,” you’re leveraging our ignorance about our own cognition as a way to open space for a “hey, anything’s possible” non-argument. But of course our lack of understanding about human cognition should leave us more skeptical of strong AI claims, lead us away from the hubris about this topic that’s consumed our media. The stronger form is a claim that our brains really do work just like LLMs. Which… really? If that were true, then when my brain asked itself “what is almond butter?,” I consulted an unfathomably large set of texts, analyzed the proximal relations between that term and billions of others, and in so doing created a web of relationships that cannot be said to constitute knowing but which enabled me to generate a string of text in return that was statistically likely to resemble a coherent response. Does that sound remotely plausible to you?

If nothing else, such a process would be incredibly cognitively inefficient. Unlike a large language model, which has only those electricity-rationing guardrails that are actually affirmatively programmed into it, a brain has an inherent tendency towards efficient use of energy. It has to; all biological systems, to one degree or another, have an inherent bias towards energy efficiency because energy efficiency increases fitness in the evolutionary sense. Physiological systems don’t have the luxury of vast server farms operating with essentially no resource constraints. So they have to do things more efficiently. A large language model can store an effectively unlimited amount of text information, and that information never degrades, and its creator will supply it with all the energy it needs. Brains don’t have those advantages. But that exact same edge driven by natural selection is why brains can do all the things they can do - including creating large language models that produce outputs that plausibly appear to be the result of an intelligence, albeit a formulaic, tepid, middle-of-the-road intelligence, which is exactly what you’d expect from what is essentially the averaging of large textual data sets.

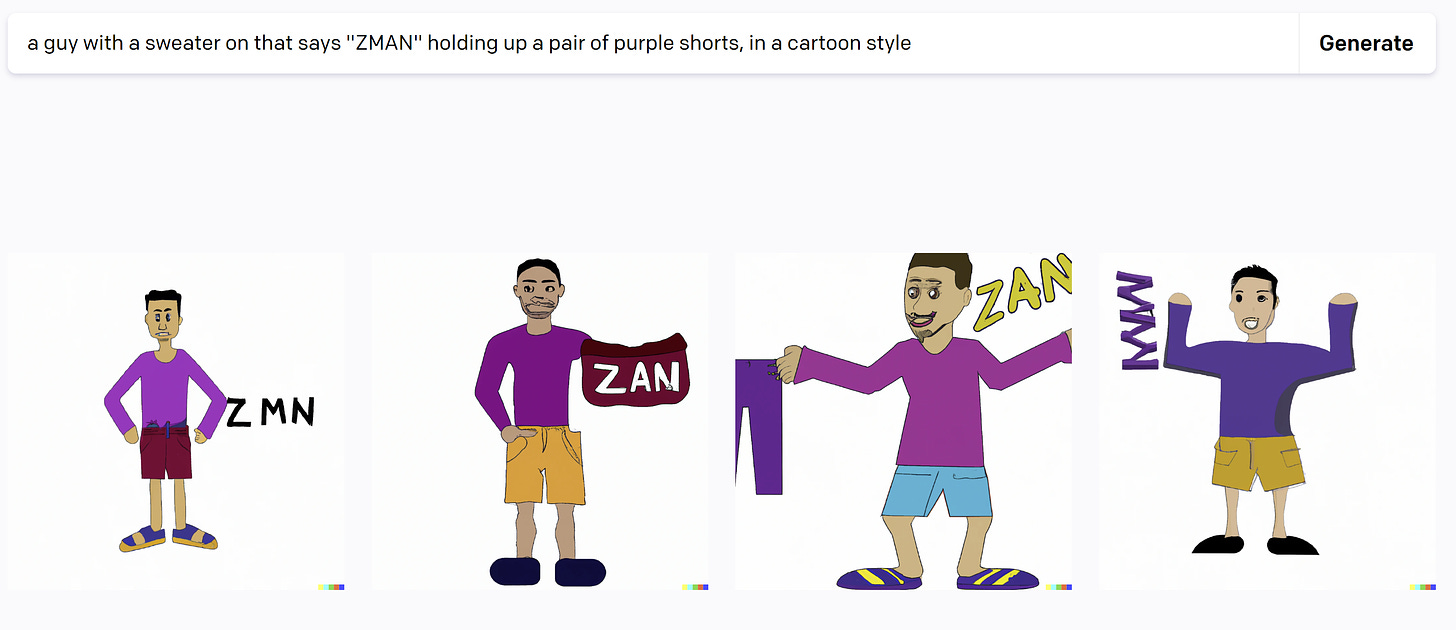

Look at the Dall-E output at the top. You will immediately notice that none of the four images actually satisfy the dictates of the prompt. That’s because they’re statistically likely interpretations of a given text string based on training data sets of immense size. That process is impossibly complicated, but it’s inherently and unalterably probabilistic - which is why it can return results that look generally like they’re in the vicinity of correct but aren’t. It’s the same reason why ChatGPT is so prone to answering historical questions with answers that appear superficially plausible but are factually untrue: ChatGPT does not understand what a fact is. It has the term “fact” and similar in its matrix of distributional semantic data but at the end of every connection is just another word that is itself defined by proximal relations to other words. LLMs don’t “understand” at all. They’re engineered instruments that develop sets of probabilistic responses based on proximal relationships between terms in training data. Dall-E didn’t notice that the response failed to fulfill the prompt because Dall-E has no noticer. It doesn’t possess the kind of rational mind that can assess the fact that the prompt has not been satisfied. If Dall-E was capable of “knowing” that it had answered the prompt incorrectly… it wouldn’t have answered the prompt incorrectly in the first place.

And this, again, is the essential point. I have little doubt that Dall-E will improve to the point where it won’t make those mistakes. If you want to tell me that one of the other big image generators wouldn’t make that mistake, I would believe you. But generating a correct result where it once generated an incorrect result would not change the underlying reality at all! And that’s a point that people just refuse to understand, I think because they don’t want to understand: the accuracy of the output of a machine learning system can never prove that it thinks, understands, or is conscious. They’re totally separate questions. Every time someone points out that these current “AI” systems are not in fact thinking similarly to how a human does, AI enthusiasts point to improving results and accuracy, as if this rebuts the critiques. But it doesn’t rebut them at all. If someone says that a plane does not fly the same way as an eagle, and you sniff that a plane can go faster than an eagle, you’re willfully missing the point.

Years back, Douglas Hofstadter (of Godel, Escher, Bach fame) worked with his research assistants at Indiana University, training a program to solve jumble puzzles like a human. By jumble puzzles I mean the game where you’re given a set of letters and asked to arrange those letters into all of the possible word combinations that you can find. This is trivially easy for even a primitive computer - the computer just tries every possible combination of letters and then compares them to a dictionary database to find matches. This is a very efficient way to go about doing things; indeed, it’s so efficient as to be inhuman. Hofstadter’s group instead tried to train a program to solve jumbles the way a human might, with trial and error. The program was vastly slower than the typical digital way, and sometimes did not find potential matches. And these flaws made it more human than the other way, not less. The same challenge presents itself to the AI maximalists out there: the more you boast of immensely efficient and accurate results, the more you’re describing an engineered solution, not one that’s similar to human thought. We know an immense amount about the silicon chips that we produce in big fabs; we know preciously little about our brains. This should make us far more cautious about what we think we know about AI.

I find it very odd how many people get defensive about this, that take AI skepticism personally. There was a fair bit of that in the comments to the last post. And the hype train is at this point as overheated and absurd as any in my adult life. The guys over at Marginal Revolution, well-established and credentialed economists, have thrown themselves into total, full-blown AI lunacy, just unhinged speculation about the immanentizing of the eschaton with no probity or restraint. But skepticism at the idea that in ~60 years human beings have replicated human intelligence is immensely sensible. Consciousness is the product of 4 billion years of evolution, which is one of the most powerful forces in the known universe. Consciousness is literally embodied, found in organic beings, carbon forms that maintain homeostasis. We assume consciousness is the product of the brain, but we don’t really know. It may very well be that (say) the liver is implicated in consciousness. Some very serious people believe that consciousness is the product of quantum effects somehow generated by neurological structures. There is no shame, at all, in admitting that we are not yet at the level of understanding this stuff, let alone replicating it.

Of course, none of this means that these LLMs and similar probabilistic AI-like models can’t do cool shit. They can do very cool shit, and I’ve never said otherwise. What we might want to ask is why so many people are so invested in believing that they can do more than cool shit.

The recurring question: why are so many otherwise reasonable people so emotionally invested in the idea that we've "invented fire" again?

I don't necessarily agree with the AI hype, but this does not show an actual understanding of how LLMs work.

It is true that LLMs are *trained* on a vast corpus of text, but when an LLM is completing prompts, it does not have direct access to any of that corpus. We don't know the size of GPT-4, but GPT-3 is only about 800GB in size.

GPT-3 is therefore NOT just looking up relevant text samples and performing statistical analysis - it does not have access to all that training data when it is completing prompts. Instead, it has to somehow compress the information contained in a vast training corpus into a relatively tiny neural net, which is then used to respond to prompts. Realistically, the only way to compress information at that level is to build powerful abstractions, i.e. a theory of the world.

Now, the theories that GPT comes up with are not really going to be theories of the physical world, because it has zero exposure to the physical world. They're probably more like theories about how human language works. But whatever is going on in there has to be much richer than simple statistical analysis, because there simply isn't enough space in the neural net to store more than a tiny fraction of the training corpus.