Tech Reviewers Write Reviews for Each Other, Not for the Public

and welcome to the Everything is Fine (really) era of tech

This post reflects beliefs about empirical questions that I can’t prove, and I recognize that the criticized reviewing behavior isn’t confined to the tech press. You have been warned. (But I’m right.)

Apple debuted its indisputably better, debatably revolutionary M1 chip in 2020. M-series processors are Apple-specific, ARM-based, TSMC-manufactured RISC chips. (Got that?) And they earned some deserved fanfare for their combination of performance and, especially, heat and battery efficiency. Since then, Apple has released the M2, M3, and (only in the iPad thus far) M4. In light of this progress, the tech press - which has operated for my entire adult life as an unpaid marketing arm for Apple - has often declared that Windows (and I guess Linux) laptops just can’t win, that the efficiency of RISC computing gives Apple an insurmountable lead. But! Qualcomm, heretofore mostly known as a manufacturer of chips for smartphones, has come out with its own RISC-based M-whatever competitor chip, the Snapdragon X Elite. The first laptops to feature this chip are just being released, and they look to be quite competitive with Apple’s silicon. For the record, both Microsoft and Apple have also recently touted their advantage in on-device machine learning “AI” tech, the benefits of which remain vague to me. Either way, the cold war continues.

Here’s the thing that I can’t prove but feel very strongly is true: almost nobody needs this shit.

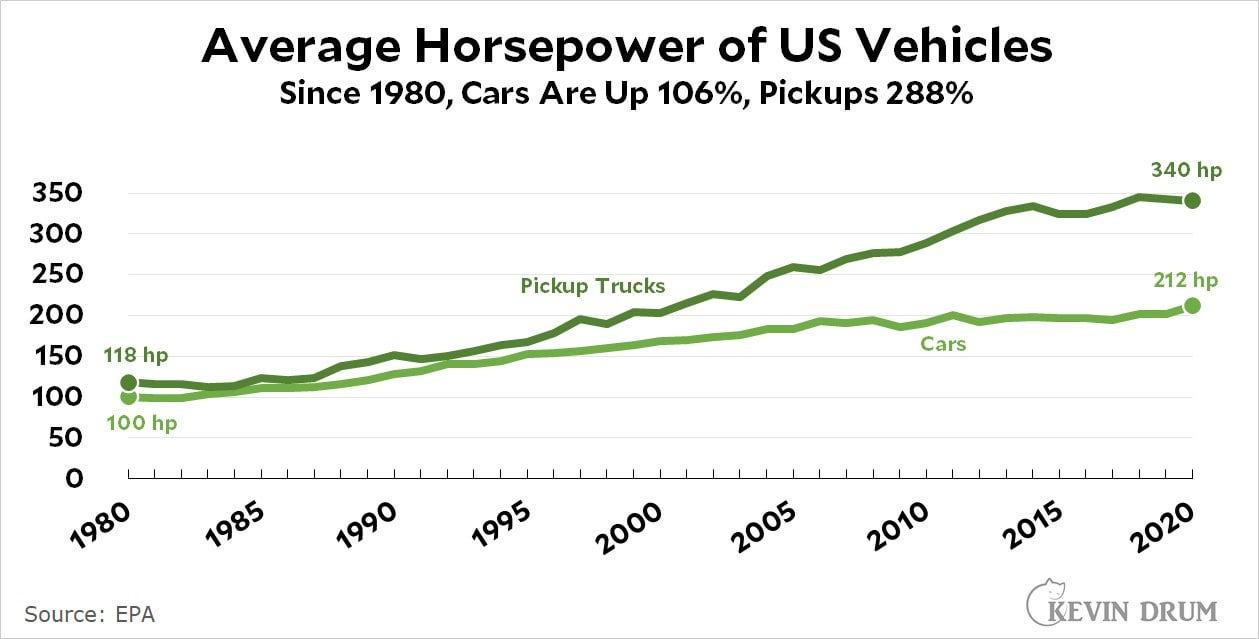

This notion that Apple forever changed the computing game with the M line, and that Microsoft has maybe mostly caught up with their new Qualcomm chips, and that this is all very important - that all depends on the idea that the average user will benefit. It depends on the assumption that the average Mac user was walking around in 2019 deeply limited by their MacBook’s silicon, that someone who bought an Intel-based x86 Dell XPS last year is struggling with it. And I’m here to suggest that this simply isn’t true. My bet is that the vast majority of the users of those pre-M MacBooks, and the users of x86 Intel-or-AMD Windows laptops now, will never notice an appreciable difference. Because I strongly believe that laptops and compute are like cars and horsepower: the manufacturers market based on max output because that’s a big shiny number customers can fixate on (NUMBER GO UP), but for most daily drivers, the max means essentially nothing because they’re never coming close to using it all.

It’s a very well-worn observation that Americans buy giant pickup trucks and then never use them for anything but rides to soccer practice and trips to Costco; there’s a similar dynamic with the American engine, where family sedans now have more horsepower than some classic muscle cars but that horsepower is never accessed. And since cars are getting heavier, too, improvements to acceleration from a stop (vastly more meaningful for actual driving than top speed) are muted. Even there, who’s really struggling with insufficient giddyup? My Mazda CX-5 gets me off the line just fine, certainly enough to do things that actual drivers actually do, like make a left-hand turn in front of approaching traffic or pass a slow-moving vehicle on the interstate. A not-powerful-at-all car, in the 2020s, has more than enough horses to drive safely and effectively in real-world conditions. It’s similar with CPUs/SoCs and laptops - marketing and reviews overemphasize performance to a degree that just doesn’t match the lived experience of most consumers. I’m willing to wager that a large majority of users essentially never use all of their processing power, very rarely chew up all of their available RAM, and rarely burn all of their battery.