Study of the Week: Rebutting Academically Adrift with Its Own Mechanism

It's a frustrating fact of life that the most visible arguments are always going to be, for most people, the arguments that define the truth. I fear that's the case with Academically Adrift, the 2011 book by Richard Arum and Josipa Roksa that has done so much to set the conventional wisdom about the value of college. That book made incendiary claims about how little college students are supposedly learning, and many people assume that its argument is the final word. In fact, there are many critical words out there on its methodology, or at least on the methodology we're allowed to see. (One of the primary complaints about the book is that the authors hide the evidence for some of their claims.) Richard Haswell's review is particularly cogent and critical:

They compared the performance of 2,322 students at twenty-four institutions during the first and fourth semesters on one Collegiate Learning Assessment task. Surprisingly, their group as a whole recorded statistically significant gain. More surprisingly, every one of their twenty-seven subgroups recorded gain. Faced with this undeniable improvement, the authors resort to the Bok maneuver and conclude that the gain was “modest” and “limited,” that learning in college is “adrift.” Not one piece of past research showing undergraduate improvement in writing and critical thinking—and there are hundreds—appears in the authors’ discussion or their bibliography....

What do they do? They create a self-selected set of participants and show little concern when more than half of the pretest group drops out of the experiment before the post-test. They choose to test that part of the four academic years when students are least likely to record gain, from the first year through the second year, ending at the well-known “sophomore slump.” They choose prompts that ask participants to write in genres they have not studied or used in their courses. They keep secret the ways that they measured and rated the student writing. They disregard possible retest effects. They run hundreds of tests of statistical significance looking for anything that will support the hypothesis of nongain and push their implications far beyond the data they thus generate.

There are more methodological critiques out there to be found, if you're interested.

Hoisted By Their Own Petard

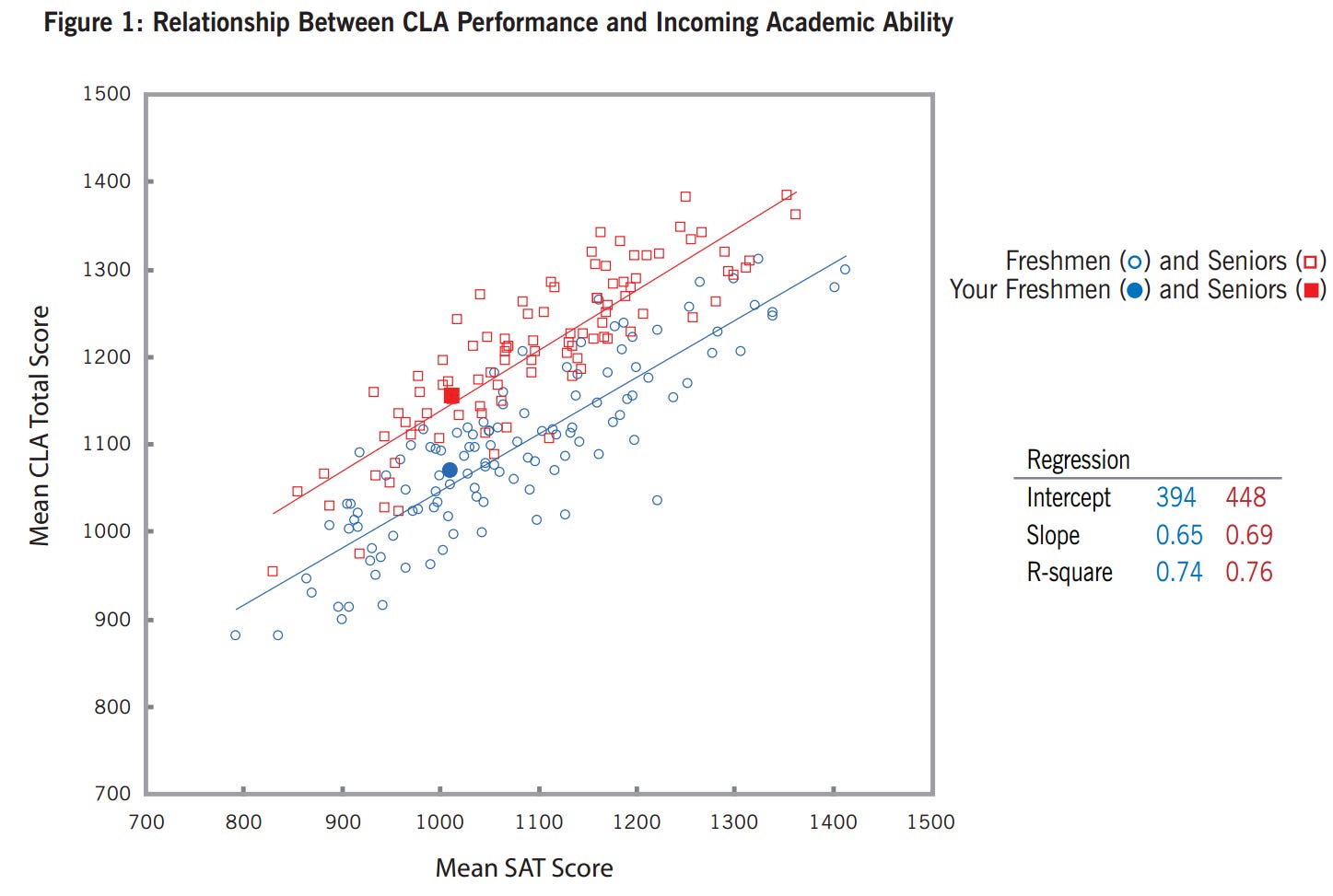

But let's say we want to be charitable and accept their basic approach as sound. Even then, their conclusions are hard to justify, as later research using the same primary mechanism found much more learning. Academically Adrift utilized the Collegiate Learning Assessment (CLA), a test of college learning developed by the Council for Aid to Education (CAE). I wrote my dissertation on the CLA and its successor, the CLA+, so as you can imagine my thoughts on the test in general are complex. I can come up with both a list of things that I like about it and a list of things that I don't like about it. For now, though, what matters is that the CLA was the primary mechanism through which Arum and Roksa made their arguments. Yet research using a far larger data set, and following the freshman-to-senior academic cycle that the CLA was intended to measure, has shown far larger gains than those reported by Arum and Roksa.

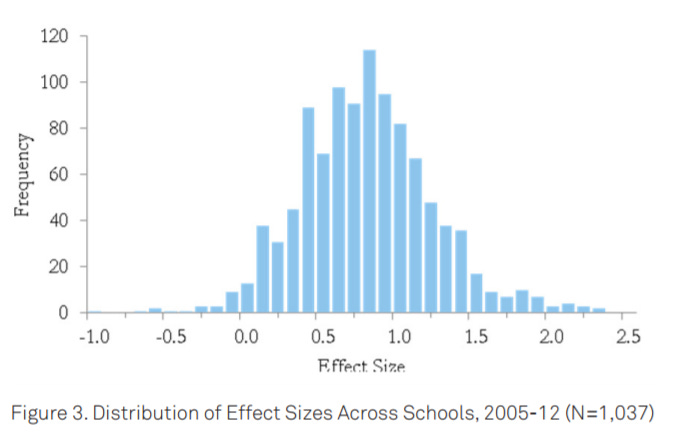

This report from CAE, titled "Does College Matter?" and this week's Study of the Week, details research on a larger selection of schools than the one measured in Academically Adrift. In contrast to the .18 SAT-normed standard deviation growth in performance that Arum and Roksa found, CAE finds an average growth of .78 SAT-normed standard deviations, with no school demonstrating an effect size of less than .62. Now, if you don't work with effect sizes often, you might not find this a particularly large increase, but as an average level of growth across institutions, it's in fact quite impressive. On the CLA's scale, which is quite similar to the SAT's and likewise runs from 400 to 1600, the average institutional score grew by over 100 points. (For contrast, commercial tutoring programs for tests like the SAT and ACT rarely produce gains of more than 15 to 20 points, despite the claims of the major prep companies.)
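One way to make these effect sizes concrete is Cohen's U3: the share of the freshman distribution that falls below the average senior. The sketch below assumes both cohorts' scores are roughly normal with the same spread, which is a simplifying assumption of mine and not something the CAE report or Academically Adrift states. The helper names `effect_size` and `share_below_average_senior` are hypothetical.

```python
from statistics import NormalDist

def effect_size(pre_mean, post_mean, reference_sd):
    """Standardized mean difference: the gain expressed in SD units."""
    return (post_mean - pre_mean) / reference_sd

def share_below_average_senior(d):
    """Cohen's U3: share of freshmen scoring below the average senior,
    ASSUMING both cohorts are normal with equal standard deviations."""
    return NormalDist().cdf(d)

# Effect sizes quoted in the text above
for label, d in [("Academically Adrift", 0.18),
                 ("CAE lowest school", 0.62),
                 ("CAE average", 0.78)]:
    print(f"{label}: d = {d:.2f}, average senior outscores "
          f"{share_below_average_senior(d):.0%} of freshmen")
```

Under those assumptions, a .18 SD gain means the average senior outscores about 57% of freshmen, while a .78 SD gain means about 78%. That is the difference between a coin flip and a clear majority.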

The authors write:

This stands in contrast to the findings of Academically Adrift (Arum and Roska, 2011) who also examined student growth using the CLA. They suggest that there is little growth in critical thinking as measured by the CLA. They report an effect size of .18, or less than 20% of a standard deviation. However, Arum and Roska used different methods of estimating this growth, which may explain the differences in growth shown here with that reported in Academically Adrift.... The Arum and Roska study is also limited to a small sample of schools that are a subset of the broader group of institutions that conduct value-added research using the CLA, and so may not be representative of CLA growth in general.

To summarize: research undertaken with the same mechanism as used in Academically Adrift and with both a dramatically larger sample size and a sample more representative of American higher education writ large contradicts the book's central claim. It would be nice if that would seep out into the public consciousness, given how ubiquitous Academically Adrift was a few years ago.

Of course, the single best way to predict a college's CLA scores is with the SAT scores of its incoming classes... but you've heard that from me before.

Motivation Matters

There's a wrinkle to all of these tests, and a real challenge to their validity.

A basic assumption of educational and cognitive testing is that students are attempting to do their best work; if students are not all sincerely trying to do their best, they introduce construct-irrelevant variance and degrade the validity of the assessment. This issue of motivation is a particularly acute problem for value-added metrics like the CLA, as students who apply greater effort to the test as freshmen than as seniors would artificially reduce the amount of demonstrated learning.

At present, the CLA is a low stakes test for students. Unlike with tests like the SAT and GRE, which have direct relevance to admission into college and graduate school, there is currently no appreciable gain to be had for individual students from taking the CLA. Whatever criticisms you may have of the SAT or ACT, we can say with confidence that most students are applying their best effort to them, given the stakes involved in college admissions. Frequently, CLA schools have to provide incentives for students to take the test at all, which typically involve small discounts on graduation-related fees or similar. The question of student motivation is therefore of clear importance for assessing the test’s validity. The developers of the test apparently agree, as in their pamphlet “Reliability and Validity of CLA+,” they write “low student motivation and effort are threats to the validity of test score interpretations."

In a 2013 study, Ou Lydia Liu, Brent Bridgeman, and Rachel Adler studied the impact of student motivation on ETS’s Proficiency Profile, itself a test of collegiate learning and a competitor to the CLA+. They tested motivation by dividing test takers into two groups. In the experimental group, students were told that their scores would be added to a permanent academic file and noted by faculty and administrators. In the control group, no such information was delivered. The study found that “students in the [experimental] group performed significantly and consistently better than those in the control group at all three institutions and the largest difference was .68 SD." That's a mighty large effect, and so a major potential confound. It is true that the Proficiency Profile is a different testing instrument than the CLA, although Liu, Bridgeman, and Adler suggest that this phenomenon could be expected in any test of college learning that is considered low stakes. The results of this research were important enough that CAE's Roger Benjamin, in an interview with Inside Higher Ed, said that the research “raises significant questions” and that the results are “worth investigating and [CAE] will do so."
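For readers wondering what a ".68 SD" difference between two groups means mechanically, here is a minimal sketch of one common way to compute it (Cohen's d with a pooled standard deviation). I am assuming this standardization for illustration; the study itself may have standardized differently. The scores below are invented and are not data from Liu, Bridgeman, and Adler.

```python
from math import sqrt
from statistics import mean, variance

def pooled_sd(a, b):
    """Pooled sample standard deviation of two independent groups."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))

def cohens_d(experimental, control):
    """Difference in group means divided by the pooled SD."""
    return (mean(experimental) - mean(control)) / pooled_sd(experimental, control)

# HYPOTHETICAL scores for illustration only, not the study's data
experimental = [452, 461, 470, 448, 466]  # told scores go in a permanent file
control = [441, 450, 458, 437, 455]       # given no such information
print(f"d = {cohens_d(experimental, control):.2f}")
```

Because random assignment is the only systematic difference between the groups, any d that emerges reflects the framing of the stakes, not differences in what the students know.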

Now, in terms of test-retest scores and value added, the big question is, do we think motivation is constant between administrations? That is, do we think our freshman and senior cohorts are each working equally hard at the test? If not, we're potentially inflating or deflating the observed learning. Personally, I think first-semester freshmen are much more likely to work hard than last-semester seniors; first-semester freshmen are so nervous and dazed you could tell them to do jumping jacks in a trig class and they'd probably dutifully get up and go for it. But absent some valid and reliable motivation indicator, there's just a lot of uncertainty as long as students are taking tests that they are not intrinsically motivated to perform well on.
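The inflation/deflation worry can be written out as simple arithmetic. In this toy model (my own simplification, not a model from any of the studies above), each cohort's observed score falls short of its full-effort score by some "effort shortfall" in SD units, so the measured gain is the true gain plus the freshman shortfall minus the senior shortfall. The function name and all numbers are hypothetical.

```python
def observed_gain(true_gain_sd, freshman_shortfall_sd, senior_shortfall_sd):
    """Measured value-added in a toy model where low effort lowers each
    cohort's observed score by a 'shortfall' (all values in SD units)."""
    return true_gain_sd - senior_shortfall_sd + freshman_shortfall_sd

TRUE_GAIN = 0.5  # HYPOTHETICAL true growth, in SD units
scenarios = {
    "equal effort": (0.0, 0.0),
    "seniors coast": (0.0, 0.3),   # measured learning is understated
    "freshmen coast": (0.3, 0.0),  # measured learning is overstated
}
for name, (fresh, senior) in scenarios.items():
    print(f"{name}: observed gain = {observed_gain(TRUE_GAIN, fresh, senior):.2f} SD")
```

With the hypothetical numbers above, the same true gain of 0.50 SD shows up as 0.50, 0.20, or 0.80 SD depending only on who is coasting. For scale, the largest motivation effect Liu, Bridgeman, and Adler report (.68 SD) is several times larger than the entire .18 SD gain reported in Academically Adrift.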

Disciplinary Knowledge - It's Important

Let's set aside questions of the test's validity for a moment. There's another reason not to fret too much about modest gains on a test like this: such tests don't measure disciplinary knowledge and aren't intended to.

That is, these tests don't measure (and can't measure) how much English an English major learns, whether a computer science student can code, what a British history major knows about the Battle of Agincourt, or whether an Education major will be able to pass a state teacher accreditation test.... These are pretty important details! The reason for this omission is simple: because these instruments want to measure students across different majors and schools, content-specific knowledge can't be involved. There's simply too much variation in what's learned from one major to the next to make such comparisons fruitful. But try telling a professor that! "Hey, we found limited learning on college campuses. Oh, measuring the stuff you actually teach your majors? We didn't try that." This is especially a problem because late-career students are presumably most invested in learning within their major and getting professionalized into a particular discipline.

I do think that "learning to learn," general meta-academic skills, and cross-disciplinary skills like researching and critically evaluating sources are important and worth investigating. But let's call tests of those things tests of those things instead of summaries of college learning writ large.

Neither Everything Nor Nothing

I have gotten in some trouble with peers in the humanities and social sciences in the past for offering qualified defenses of test instruments like the CLA+. To many, these tests are porting the worst kinds of testing mania into higher education and reducing the value of college to a number. I understand these critiques and think they have some validity, but I think they are somewhat misplaced.

First, it's important to say that for all of the huffing and puffing of presidential administrations since the Reagan White House put out A Nation at Risk, there's still very little in the way of overt federal pressure being placed on institutions to adopt tests like this, particularly in a high-stakes way. We tend to think of colleges and universities as politically powerless, but in fact they represent a powerful lobby and have proven able to defend their own independence. (Though not their funding, I'm very sorry to say.)

Second, I will again say that a great deal of the problem with standardized testing lies in the absurd scope of that testing. That is, the current mania for testing has convinced people that the only way to test is to do census-style testing - that is, testing all the students, all the time. But as I will continue to insist, the power of inferential statistics means that we can learn a great deal about the overall trends in college learning without overly burdening students or forcing professors to teach to the test. Scaling results up from carefully collected, randomized and stratified samples is something that we do very well. We can have relatively small numbers of college students taking tests a couple of times in their careers and still glean useful information about our schools and the system.
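To show how little testing a sampling approach needs, here is a minimal sketch of proportional stratified sampling: group students by a stratum (major, in this example), test the same small fraction of each group at random, then weight each group's average by its share of the student body. Everything here is hypothetical: the function names, the use of majors as strata, and the simulated scores. A real assessment design would also have to deal with nonresponse and with clustering by institution.

```python
import random
import statistics
from collections import defaultdict

def stratified_sample(students, stratum_of, fraction, seed=0):
    """Draw the same fraction of students at random from each stratum
    (proportional allocation), with at least one student per stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for student in students:
        strata[stratum_of(student)].append(student)
    sample = {
        key: rng.sample(members, max(1, round(len(members) * fraction)))
        for key, members in strata.items()
    }
    return strata, sample

def stratified_mean(strata, sample, score_of):
    """Estimate the population mean by weighting each stratum's sample mean
    by that stratum's share of the whole population."""
    total = sum(len(members) for members in strata.values())
    return sum(
        len(strata[key]) / total * statistics.mean(score_of(s) for s in drawn)
        for key, drawn in sample.items()
    )

# HYPOTHETICAL population of (major, score) pairs, not real CLA data
rng = random.Random(42)
population = (
    [("humanities", rng.gauss(1100, 120)) for _ in range(2000)]
    + [("stem", rng.gauss(1150, 120)) for _ in range(6000)]
)
strata, sample = stratified_sample(population, lambda s: s[0], fraction=0.05)
print(sum(len(d) for d in sample.values()), "students tested out of", len(population))
print(round(stratified_mean(strata, sample, lambda s: s[1])), "estimated mean score")
```

Here 400 of 8,000 hypothetical students sit the test, yet every major is represented in proportion to its size. Stratifying guarantees that coverage in a way a simple random draw of the same size does not.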

Ultimately, I think we need to be doing something to demonstrate learning gains in college, because the university is threatened. We have many enemies, and they are powerful. And unless we can make an affirmative case for learning, we will be defenseless. Should a test like the CLA+ be the only way we make that case? Of course not. Instead, we should use a variety of means, including tests like the CLA+ or Proficiency Profile, disciplinary tests developed by subject-matter experts and given to students in appropriate disciplines, faculty-led and controlled assessment of individual departments and programs, raw metrics like graduation rate and time-to-graduation, student satisfaction surveys like the Gallup-Purdue index, and broader, more humanistic observations of contemporary campus life. These instruments should be tools in our toolbelt, not the hammer that forces us to see a world of nails. And the best data available to us that utilizes this particular tool tells us the average American college is doing a pretty good job.